Akana Platform Database Maintenance

A guide on how to maintain the database.

Table of Contents

- Introduction

- Types of volatile data

- Using the built-in jobs

- Leveraging cron to delete data

- Partitioning large data stores

- Partitioning large data stores under load (MySQL Only)

- Dropping partitions

- MongoDB maintenance

- Recommended MongoDB indexes

- MongoDB: metric rollup data cleanup

- How to add entries to the crontab to create the cron job

- How to add an email address within a cron job entry

- How to configure the wait_timeout and max_idle_time value

Introduction

This document describes the types of data that require regular archiving and ways to implement it.

Types of volatile data

There are several key tables that require regular maintenance:

Policy Manager Tables:

| Table Name | Description |

|---|---|

| AM_ALERTS | Stores the fired alerts in the system. |

| AM_ALERTS_SLAS | Stores the fired SLA alerts in the system. |

| AM_EMAILALERTS | Stores the fired email alerts in the system. |

| MO_USAGEDATA** |

Stores the metadata about the Service/API calls managed by the API Gateway. This is controlled by the auditing policy. See note below. |

| MO_USAGE_NEXTHOP** |

Stores references to downstream (next hop) usage data records. See note below. |

| MO_USAGEMSGS** |

Stores the Service/API calls (request/response/fault) message contents. This is controlled by the auditing policy. See note below. |

| MO_ROLLUPDATA | Stores 5-second rollup data transmitted by each Network Director. |

| MO_ROLLUP15 | Stores 15-minute rollups derived from the above table by the Policy Manager Scheduled Jobs. |

| MO_ROLLUP_HOUR | Stores hourly rollups derived by the Policy Manager Scheduled Jobs. |

| MO_ROLLUP_DAY | Stores daily rollups derived by the Policy Manager Scheduled Jobs. |

| MO_ROLL_ORG15 | Stores 15-minute rollups per business (organization) derived by the Policy Manager Scheduled Jobs. |

| MO_ROLL_ORG_H | Stores hourly rollups per business (organization) derived by the Policy Manager Scheduled Jobs. |

| MO_ROLL_ORG_D | Stores daily rollups per business (organization) derived by the Policy Manager Scheduled Jobs. |

**Note: These tables can potentially contain VERY large quantities of data and will require special handling such as partitioning.

Community Manager Tables:

| Table Name | Description |

|---|---|

| BOARD_ITEMS | Stores the tickets, discussions and reviews. |

| COMMENTS | Stores the comments on the board items above (Has FK with BOARD_ITEMS with cascading delete). |

| COMMENT_MARKS | Stores the like/thumbs-up votes for the comments (Has FK with COMMENTS with cascading delete). |

| API_CONTRACT_SLA_DATA | Inserts the records to the API_CONTRACT_SLA_DATA table at a specified interval when a Service Level and/or Service Level Enforcement QOS policy is configured with a contract that includes a license, which is attached to the API. The interval can be set while creating the QOS policy. |

**Note: The API_CONTRACT_SLA_DATA table can grow exponentially depending on the number of APIs configured with a contract that includes a license with a QOS policy attached to it. To avoid this table size from growing, we recommend the following:

-

Create a job that runs on a daily, weekly, or monthly basis as required to delete older data from the table.

-

If feasible, increase the SLA time interval in the QOS policy, so the job writes less frequently to the API_CONTRACT_SLA_DATA table.

Using the built-in jobs

Policy Manager has a built-in capability to delete/archive data in the MO_USAGE* and MO_ROLL* tables. These jobs run every hour and can add significant overhead to the Policy Manager container, so are best suited to low-throughput environments with limited retained data.

To configure the built-in jobs, go to the Policy Manager Administration Console > Configuration tab > com.soa.rollup.delete.old. The settings in this screen can be summarized as follows:

- *.enable—enable/disable the job

- *.exportDeleted—set to true if you want the data exported on delete

- *.unit—the time unit to retain data (hour, week, month, year)

- *.windowSize—the number of units to retain data

For example, if you want to delete all the 15-minute rollup data more than 1 week old, you would set:

monitoring.delete.rollup.MO_ROLLUP15.enable=true monitoring.delete.rollup.MO_ROLLUP15.exportDeleted=false monitoring.delete.rollup.MO_ROLLUP15.unit=week monitoring.delete.rollup.MO_ROLLUP15.windowSize=1

Note: There is no scheduled job to delete/archive Alerts or any Community Manager data. This is best done using a cron job as described in the next section.

To disable the built-in jobs for higher throughput environments, set all *.enable properties to false as follows:

monitoring.delete.rollup.MO_ROLLUP15.enable=false monitoring.delete.rollup.MO_ROLLUPDATA.enable=false monitoring.delete.rollup.MO_ROLLUP_DAY.enable=false monitoring.delete.rollup.MO_ROLLUP_HOUR.enable=false monitoring.delete.rollup.MO_ROLL_ORG15.enable=false monitoring.delete.rollup.MO_ROLL_ORG_D.enable=false monitoring.delete.rollup.MO_ROLL_ORG_H.enable=false monitoring.delete.usage.enable=false

Leveraging cron to delete data

The cron is a Linux utility program that lets you input commands for scheduling tasks repeatedly at a specific time. The tasks scheduled in cron are called cron jobs. The cron jobs are created by the user and they can determine what kind of tasks to automate and the interval at which the tasks should be executed.

A cron file is a simple text file that contains commands to run tasks periodically at a specific time. To perform Akana database maintenance activities, you can create and configure the cron jobs to run on the machine that hosts the database. A cron job will perform periodic cleanup of the volatile data contained in the tables within the schema used by Akana and can be executed on a regular basis to keep the overall size of the database in check.

For higher throughput environments, it's better to offload the task to delete/archive data by simply executing scripts directly against the database using a cron job. The following scripts will delete all:

- next-hop data in MO_USAGE_NEXTHOP older than 1 month

- usage messages in MO_USAGEMSGS older than 1 month

- usage data in MO_USAGEDATA older than 1 month

- 5-second rollup data in MO_ROLLUPDATA older than 1 month

- 15-minute rollup data in MO_ROLLUP15 older than 1 month

- 15-minute rollup data in MO_ROLL_ORG15 older than 1 month

- 1-hour rollup data in MO_ROLLUP_HOUR older than 3 months

- 1-hour rollup data in MO_ROLL_ORG_H older than 3 months

- 1-day rollup data in MO_ROLLUP_DAY older than 1 year

- 1-day rollup data in MO_ROLL_ORG_D older than 1 year

- alerts in AM_ALERTS, AM_ALERTS_SLAS, and AM_EMAILALERTS older than 1 month

- SLA data in API_CONTRACT_SLA_DATA older than 1 year

Note: The intervals given here are sample time periods for the purpose of illustration. You can adjust time periods as needed depending on how often you want to run the job and how long you want to retain the data.

The examples below illustrate how you can run the above scripts, for different database types:

- Deleting/archiving data: MySQL

- Deleting/archiving data: Oracle

- Deleting/archiving data: Microsoft SQL Server

Deleting/archiving data: MySQL

The example below shows database queries to run for a MySQL database.

The intervals given here are recommendations. Modify the intervals according to your needs.

/* Queries to clean-up the data from Usage / Rollup tables */ delete from MO_USAGE_NEXTHOP where REQUESTDTS < TIMESTAMPADD(MONTH, -1, now()); delete from MO_USAGEMSGS where MSGCAPTUREDDTS < TIMESTAMPADD(MONTH, -1, now()); delete from MO_USAGEDATA where REQUESTDTS < TIMESTAMPADD(MONTH, -1, now()); delete from MO_ROLLUPDATA where ROLLUPDATAID < (select min(MAX_ID) from MO_STATUS) and INTVLSTARTDTS < TIMESTAMPADD(MONTH, -1, now()); delete from MO_ROLLUP15 where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -1, now()); delete from MO_ROLL_ORG15 where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -1, now()); delete from MO_ROLLUP_HOUR where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -3, now()); delete from MO_ROLL_ORG_H where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -3, now()); delete from MO_ROLLUP_DAY where INTVLSTARTDTS < TIMESTAMPADD(YEAR, -1, now()); delete from MO_ROLL_ORG_D where INTVLSTARTDTS < TIMESTAMPADD(YEAR, -1, now()); /* Queries to clean-up the data from ALERTS tables */ delete e from AM_ALERTS a inner join AM_EMAILALERTS e on e.ALERTSID = a.ALERTSID where a.SOURCEDTS < TIMESTAMPADD(MONTH, -1, now()); delete s from AM_ALERTS a inner join AM_ALERTS_SLAS s on s.ALERTSID = a.ALERTSID where a.SOURCEDTS < TIMESTAMPADD(MONTH, -1, now()); delete from AM_ALERTS where SOURCEDTS < TIMESTAMPADD(MONTH, -1, now());

For Community Manager, there are additional tables such as the Boards (Forums) that you might want to clean up:

delete from BOARD_ITEM_ASSIGNMENTS where ITEMID in (select ITEMID from BOARD_ITEMS where ARCHIVABLE='Y' and CREATED < TIMESTAMPADD(YEAR, -1, now())); delete from BOARD_ITEMS where ARCHIVABLE='Y' and CREATED < TIMESTAMPADD(YEAR, -1, now()); delete from API_CONTRACT_SLA_DATA where LASTMODIFIEDDTS < TIMESTAMPADD(YEAR, -1, now()); delete from API_SLA_DATA where LASTMODIFIEDDTS < TIMESTAMPADD(YEAR, -1, now());

For sample scripts on MySQL, Oracle, and Microsoft SQL Server, download the following zip archive: sample_scripts.zip.

Once you're satisfied with the script, you can set up a cron job to execute it each night. For example, you could configure cron to execute at 1am each morning as follows:

0 1 * * * /xxx/bin/cleanup.sh

There is an optional detailed logging option -vvv that can be added to each query if you prefer to see details about the query being executed in the final log that is emailed after the cron job is executed. In addition to logging the query being performed, -vvv will also provide an output after a delete query that lists the number of rows deleted by the query. The following is an example of the delete query with the extra logging option added:

mysql -u root -ppassword -D akanasampleschema -vvv -e "delete from MO_USAGE_NEXTHOP where REQUESTDTS < TIMESTAMPADD(MONTH, -1, now());"

Deleting/archiving data: Oracle

The example below shows database queries to run for an Oracle database.

The intervals given here are recommendations. Modify the intervals according to your needs.

/* Queries to clean up the data from Usage / Rollup tables*/ delete from MO_USAGE_NEXTHOP where REQUESTDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH); delete from MO_USAGEMSGS where MSGCAPTUREDDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH); delete from MO_USAGEDATA where REQUESTDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH); delete from MO_ROLLUPDATA where ROLLUPDATAID < (select min(MAX_ID) from MO_STATUS) and INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH); delete from MO_ROLLUP15 where INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH); delete from MO_ROLL_ORG15 where INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH); delete from MO_ROLLUP_HOUR where INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '3' MONTH); delete from MO_ROLL_ORG_H where INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '3' MONTH); delete from MO_ROLLUP_DAY where INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '1' YEAR); delete from MO_ROLL_ORG_D where INTVLSTARTDTS < (SYSTIMESTAMP - INTERVAL '1' YEAR); /* Queries to clean-up the data from ALERTS tables*/ delete from AM_EMAILALERTS e WHERE EXISTS (select a.ALERTSID from AM_ALERTS a where a.ALERTSID = e.ALERTSID and a.SOURCEDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH)); delete from AM_ALERTS_SLAS s WHERE EXISTS (select a.ALERTSID from AM_ALERTS a where a.ALERTSID = s.ALERTSID and a.SOURCEDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH)); delete from AM_ALERTS where SOURCEDTS < (SYSTIMESTAMP - INTERVAL '1' MONTH);

For Community Manager, there are additional tables such as the Boards (Forums) that you might want to clean up:

delete from BOARD_ITEM_ASSIGNMENTS where ITEMID in (select ITEMID from BOARD_ITEMS where ARCHIVABLE='Y' and CREATED < (SYSTIMESTAMP - INTERVAL '1' YEAR)); delete from BOARD_ITEMS where ARCHIVABLE='Y' and CREATED < (SYSTIMESTAMP - INTERVAL '1' YEAR); delete from API_CONTRACT_SLA_DATA where LASTMODIFIEDDTS < (SYSTIMESTAMP - INTERVAL '1' YEAR); delete from API_SLA_DATA where LASTMODIFIEDDTS < (SYSTIMESTAMP - INTERVAL '1' YEAR);

For sample scripts on MySQL, Oracle, and Microsoft SQL Server, download the following zip archive: sample_scripts.zip.

Once you're satisfied with the script, you can set up a cron job to execute it each night. For example, you could configure cron to execute at 1am each morning as follows:

0 1 * * * /xxx/bin/cleanup.sh

Deleting/archiving data: Microsoft SQL Server

The example below shows database queries to run for a Microsoft SQL Server database.

The intervals given here are recommendations. Modify the intervals according to your needs.

Policy Manager cleanup:

/* Queries to clean-up the data from Usage / Rollup tables */ delete from MO_USAGE_NEXTHOP where REQUESTDTS < DATEADD(MONTH, -1, GETDATE()); delete from MO_USAGEMSGS where MSGCAPTUREDDTS < DATEADD(MONTH, -1, GETDATE()); delete from MO_USAGEDATA where REQUESTDTS < DATEADD(MONTH, -1, GETDATE()); delete from MO_ROLLUPDATA where ROLLUPDATAID < (select min(MAX_ID) from MO_STATUS) and INTVLSTARTDTS < DATEADD(MONTH, -1, GETDATE()); delete from MO_ROLLUP15 where INTVLSTARTDTS < DATEADD(MONTH, -1, GETDATE()); delete from MO_ROLL_ORG15 where INTVLSTARTDTS < DATEADD(MONTH, -1, GETDATE()); delete from MO_ROLLUP_HOUR where INTVLSTARTDTS < DATEADD(MONTH, -3, GETDATE()); delete from MO_ROLL_ORG_H where INTVLSTARTDTS < DATEADD(MONTH, -3, GETDATE()); delete from MO_ROLLUP_DAY where INTVLSTARTDTS < DATEADD(YEAR, -1, GETDATE()); delete from MO_ROLL_ORG_D where INTVLSTARTDTS < DATEADD(YEAR, -1, GETDATE()); /* Queries to clean-up the data from ALERTS tables */ delete e from AM_ALERTS a inner join AM_EMAILALERTS e on e.ALERTSID = a.ALERTSID where a.SOURCEDTS < DATEADD(MONTH, -1, GETDATE()); delete s from AM_ALERTS a inner join AM_ALERTS_SLAS s on s.ALERTSID = a.ALERTSID where a.SOURCEDTS < DATEADD(MONTH, -1, GETDATE()); delete from AM_ALERTS where SOURCEDTS < DATEADD(MONTH, -1, GETDATE());

For Community Manager, there are additional tables such as the Boards (Forums) that you might want to clean up:

delete from BOARD_ITEM_ASSIGNMENTS where ITEMID in (select ITEMID from BOARD_ITEMS where ARCHIVABLE='Y' and CREATED < DATEADD(YEAR, -1, GETDATE()); delete from BOARD_ITEMS where ARCHIVABLE='Y' and CREATED < DATEADD(YEAR, -1, GETDATE()); delete from API_CONTRACT_SLA_DATA where LASTMODIFIEDDTS < DATEADD(YEAR, -1, GETDATE()); delete from API_SLA_DATA where LASTMODIFIEDDTS < DATEADD(YEAR, -1, GETDATE());

For sample scripts on MySQL, Oracle, and Microsoft SQL Server, download the following zip archive: sample_scripts.zip.

When you're satisfied with the script, you can set up a batch job to execute it each night. As Windows does not have cron, you could configure the cleanup.bat in Task Scheduler. For example, you could configure it to execute at 1am each morning. For an example of configuring a scheduled task in the Task Scheduler, see Daily Trigger Example (XML) (Microsoft help).

Additional Information about MySQL cleanup.sh script

The MySQL cleanup.sh sample script has the following placeholder values that must be changed prior to implementing the script as a cron job:

-

-u xxx

Change xxx to the username for the database you want to access.

-

-pxxx

Change xxx to the password for the database you want to access.

Note: There is no space after -p and the password must be typed directly after the -p with no space in between.

-

dbname

Change dbname to the schema you will be modifying with this script.

Partitioning large data stores

When the amount of data in the MO_USAGE_DATA, MO_USAGEMSGS and MO_USAGE_NEXTHOP tables climbs over 100 GB of data, the deletion of data takes too long and locks up the tables for concurrent write operations. To overcome this, it is highly recommended that you implement a partitioning strategy that allows you to simply drop an entire partition without incurring any performance overhead.

Partitioning is fairly complex. As a result, we recommend that you test this thoroughly before attempting it on a production system.

Note: If the tables contain a large amount of data, the process of partitioning will be extremely time and resource intensive and will be virtually impossible to perform under load. Refer to the next section for the correct approach in these circumstances.

This section includes partitioning information for:

MySQL

Drop existing foreign keys, modify, and add new indexes

Partitioning in MySQL does not support foreign key relationships. In addition, the partition key must be added to the primary key index:

ALTER TABLE MO_USAGE_NEXTHOP DROP FOREIGN KEY FK_NXTHOP_EVENTID, DROP PRIMARY KEY, ADD PRIMARY KEY (NEXTHOPID, REQUESTDTS); ALTER TABLE MO_USAGEMSGS DROP PRIMARY KEY, ADD PRIMARY KEY (EVENTID,SEQ,MSGCAPTUREDDTS), DROP FOREIGN KEY FK_USG_EVENTID; ALTER TABLE MO_USAGEDATA DROP PRIMARY KEY, ADD PRIMARY KEY (USAGEDATAID, REQUESTDTS), DROP INDEX UI_USG_EVENTID, ADD INDEX NUI_USG_EVENTID(EVENTID), ADD INDEX NUI_USG_USAGEDATAID(USAGEDATAID);

Create partitions

You then create partitions, with the idea that each partition represents the deletion interval. In this example, you are deleting data once a week and keeping a maximum of 8 weeks of data. The dates must also be changed to match the current time. The names of the partitions are arbitrary, but are used by the cleanup script.

ALTER TABLE MO_USAGE_NEXTHOP

PARTITION BY RANGE (TO_DAYS(REQUESTDTS)) (

PARTITION p0000 VALUES LESS THAN (TO_DAYS('2015-01-04 00:00:00')),

PARTITION p0111 VALUES LESS THAN (TO_DAYS('2015-01-11 00:00:00')),

PARTITION p0118 VALUES LESS THAN (TO_DAYS('2015-01-18 00:00:00')),

PARTITION p0125 VALUES LESS THAN (TO_DAYS('2015-01-25 00:00:00')),

PARTITION p0201 VALUES LESS THAN (TO_DAYS('2015-02-01 00:00:00')),

PARTITION p0208 VALUES LESS THAN (TO_DAYS('2015-02-08 00:00:00')),

PARTITION p0215 VALUES LESS THAN (TO_DAYS('2015-02-15 00:00:00')),

PARTITION p0222 VALUES LESS THAN (TO_DAYS('2015-02-22 00:00:00')),

PARTITION future VALUES LESS THAN MAXVALUE

);

ALTER TABLE MO_USAGEMSGS

PARTITION BY RANGE (TO_DAYS(MSGCAPTUREDDTS)) (

PARTITION p0000 VALUES LESS THAN (TO_DAYS('2015-01-04 00:00:00')),

PARTITION p0111 VALUES LESS THAN (TO_DAYS('2015-01-11 00:00:00')),

PARTITION p0118 VALUES LESS THAN (TO_DAYS('2015-01-18 00:00:00')),

PARTITION p0125 VALUES LESS THAN (TO_DAYS('2015-01-25 00:00:00')),

PARTITION p0201 VALUES LESS THAN (TO_DAYS('2015-02-01 00:00:00')),

PARTITION p0208 VALUES LESS THAN (TO_DAYS('2015-02-08 00:00:00')),

PARTITION p0215 VALUES LESS THAN (TO_DAYS('2015-02-15 00:00:00')),

PARTITION p0222 VALUES LESS THAN (TO_DAYS('2015-02-22 00:00:00')),

PARTITION future VALUES LESS THAN MAXVALUE

);

ALTER TABLE MO_USAGEDATA

PARTITION BY RANGE (TO_DAYS(REQUESTDTS)) (

PARTITION p0000 VALUES LESS THAN (TO_DAYS('2015-01-04 00:00:00')),

PARTITION p0111 VALUES LESS THAN (TO_DAYS('2015-01-11 00:00:00')),

PARTITION p0118 VALUES LESS THAN (TO_DAYS('2015-01-18 00:00:00')),

PARTITION p0125 VALUES LESS THAN (TO_DAYS('2015-01-25 00:00:00')),

PARTITION p0201 VALUES LESS THAN (TO_DAYS('2015-02-01 00:00:00')),

PARTITION p0208 VALUES LESS THAN (TO_DAYS('2015-02-08 00:00:00')),

PARTITION p0215 VALUES LESS THAN (TO_DAYS('2015-02-15 00:00:00')),

PARTITION p0222 VALUES LESS THAN (TO_DAYS('2015-02-22 00:00:00')),

PARTITION future VALUES LESS THAN MAXVALUE

);

Oracle

Oracle does not permit the ALTER-ing of tables to add partitions. This means that new tables need to be created and the data migrated over. The steps are:

Step 1: Create new tables

Create new, partitioned tables with a _NEW suffix. Note that the 'PARTITION BY RANGE (REQUESTDTS) INTERVAL' clause will result in new partitions being created automatically.

Note: The values below are updated for version 8.0x and later.

CREATE TABLE MO_USAGE_NEXTHOP_NEW (

NEXTHOPID number(38,0) NOT NULL,

EVENTID varchar2(41) NOT NULL,

URL varchar2(4000) NULL,

REQUESTDTS date NOT NULL,

CREATEDTS date NOT NULL,

RESPTIME number(38,0) NULL

,CONSTRAINT MO_USG_NEXTHOP_BAK_PK primary key (NEXTHOPID,REQUESTDTS))

PARTITION BY RANGE (REQUESTDTS) INTERVAL (NUMTODSINTERVAL(7,'day'))

( PARTITION p0 VALUES LESS THAN (to_date('01-JUN-2015','DD-MON-YYYY'))

);

CREATE TABLE MO_USAGEMSGS_NEW (

EVENTID varchar2(41) NOT NULL,

SEQ number(38,0) NOT NULL,

MSGNAME varchar2(64) NOT NULL,

MSGCAPTUREDDTS date NOT NULL,

MSGCAPTUREDMILLIS number(38,0) NOT NULL,

MESSAGE clob NOT NULL,

TYPE varchar2(10) NOT NULL,

ISCOMPLETEMESSAGE char(1) NOT NULL,

TRANSPORTHEADERS varchar2(2048) NULL,

CONSTRAINT MO_USAGEMSGS_BAK_PK primary key (EVENTID,SEQ, MSGCAPTUREDDTS))

PARTITION BY RANGE (MSGCAPTUREDDTS) INTERVAL (NUMTODSINTERVAL(7,'day'))

( PARTITION p0 VALUES LESS THAN (to_date('01-JUN-2015','DD-MON-YYYY'))

);

CREATE TABLE MO_USAGEDATA_NEW (

USAGEDATAID number(38,0) NOT NULL,

EVENTID varchar2(41) NOT NULL,

PARENTEVENTID varchar2(41) NULL,

CLIENTHOST varchar2(255) NULL,

MPNAME varchar2(64) NOT NULL,

OPERATIONID number(38,0) NOT NULL,

SERVICEID number(38,0) NOT NULL,

ORGID number(38,0) DEFAULT 0 NULL,

CONTRACTID number(38,0) NOT NULL,

BINDTEMPLATEID number(38,0) NOT NULL,

REQUSERNAME varchar2(128) NULL,

REQUESTDTS date NOT NULL,

REQUESTMILLIS number(38,0) NOT NULL,

RESPONSETIME number(38,0) NOT NULL,

REQMSGSIZE number(38,0) NOT NULL,

NMREQMSGSIZE number(38,0) NULL,

RESPMSGSIZE number(38,0) NULL,

NMRESPMSGSIZE number(38,0) NULL,

ERRCATEGORY number(38,0) NULL,

ERRMESSAGE varchar2(512) NULL,

ERRDETAILS varchar2(1024) NULL,

CREATEDTS date NOT NULL,

CREATEDMILLIS number(38,0) NOT NULL,

NEXTHOPURL varchar2(4000) NULL,

ISSOAPFLTBYMP number(38,0) NOT NULL,

ISSOAPFLTBYNEXTHOP number(38,0) NOT NULL,

LISTENERURL varchar2(4000) NULL,

NEXTHOPRESPTIME number(38,0) NULL,

APPUSERNAME varchar2(128) NULL,

OTHERUSERNAMES varchar2(512) NULL,

CUSTOMFIELD1 varchar2(256) NULL,

VERB varchar2(8) NULL,

STATUS_CODE varchar2(8) NULL,

CLIENTID number(38,0) DEFAULT 0 NOT NULL,

CONSTRAINT MO_USAGEDATA_PK primary key (USAGEDATAID))

PARTITION BY RANGE (REQUESTDTS) INTERVAL (NUMTODSINTERVAL(7,'day'))

( PARTITION p0 VALUES LESS THAN (to_date('01-JUN-2015','DD-MON-YYYY'))

);

CREATE INDEX MO_USAGEDATA_PK1 ON MO_USAGEDATA_NEW(REQUESTDTS DESC,REQUESTMILLIS DESC);

CREATE INDEX MO_USAGEDATA_PK2 ON MO_USAGEDATA_NEW(OPERATIONID);

CREATE INDEX MO_USAGEDATA_PK3 ON MO_USAGEDATA_NEW(CONTRACTID);

CREATE INDEX MO_USAGEDARA_IX1 ON MO_USAGEDATA_NEW(SERVICEID);

Note: Table definitions might change based on product version. You should check to make sure that the definition above matches your table structure and alter it as necessary.

Step 2: Switch the new tables with the old tables

Rename the existing live tables to *_BCK and replace them with the new, empty tables:

RENAME TABLE MO_USAGEMSGS TO MO_USAGEMSGS_BAK; RENAME TABLE MO_USAGEMSGS_NEW TO MO_USAGEMSGS; RENAME TABLE MO_USAGEDATA TO MO_USAGEDATA_BAK; RENAME TABLE MO_USAGEDATA_NEW TO MO_USAGEDATA; RENAME TABLE MO_USAGE_NEXTHOP TO MO_USAGE_NEXTHOP_BAK; RENAME TABLE MO_USAGE_NEXTHOP_NEW TO MO_USAGE_NEXTHOP;

Step 3: Merge data

Due to the fact that you created a set of new tables, you need to merge the data from the old tables into the new partitioned tables so that it is not lost.

INSERT INTO MO_USAGE_NEXTHOP SELECT * FROM MO_USAGE_NEXTHOP_BAK; INSERT INTO MO_USAGEDATA SELECT * FROM MO_USAGEDATA_BAK; INSERT INTO MO_USAGEMSGS SELECT * FROM MO_USAGEMSGS_BAK;

These scripts would be called several times with different, incremental values of X and Y where X and Y represents a small time interval such as 6 hours. This keeps the merging of data discrete and less error-prone.

You also might want to call this from a shell script to automate the process—see the sample script merge.sh in sample_scripts.zip.

Note: Table definitions might change based on product version. You should check to make sure that the definition above matches your table structure and alter it as necessary.

Partitioning large data stores under load (MySQL Only)

If the usage tables contain a large amount of data you will need to rename, rotate and transfer data from the existing tables to a new set of partitioned tables. For Oracle, the steps are exactly the same as shown in the previous section. For MySQL, it is quicker to partition the tables with an ALTER statement and merge a much smaller dataset. This is shown below. The steps are:

- Step 1: Create new tables (MySQL only)

- Step 2: Switch the new tables with the old tables (MySQL only)

- Step 3: Drop existing foreign keys and add new indexes (MySQL only)

- Step 4: Create partitions (MySQL only)

- Step 5: Switch the partitioned tables back (MySQL only)

- Step 6: Merge data (MySQL only)

Step 1: Create new tables (MySQL only)

First, create new tables that will temporarily support the live system. These do not have to be partitioned as they will only support a small amount of data:

CREATE TABLE MO_USAGEMSGS_NEW ( EVENTID VARCHAR(41) NOT NULL, SEQ INT NOT NULL, MSGNAME VARCHAR(64) NOT NULL, MSGCAPTUREDDTS TIMESTAMP DEFAULT 0 NOT NULL, MSGCAPTUREDMILLIS INT NOT NULL, MESSAGE LONGTEXT NOT NULL, TYPE VARCHAR(10) NOT NULL, ISCOMPLETEMESSAGE CHAR(1) NOT NULL, TRANSPORTHEADERS VARCHAR(2048) NULL. CONSTRAINT MO_USAGEMSGS_PK primary key (EVENTID,SEQ) ) ROW_FORMAT=DYNAMIC Engine=InnoDB; CREATE INDEX NUI_MO_USGMSGS1 ON MO_USAGEMSGS_NEW(MSGCAPTUREDDTS); CREATE INDEX NUI_MO_USGMSGS2 ON MO_USAGEMSGS_NEW(MSGCAPTUREDDTS, MSGCAPTUREDMILLIS); CREATE TABLE MO_USAGEDATA_NEW ( USAGEDATAID BIGINT AUTO_INCREMENT NOT NULL, EVENTID VARCHAR(41) NOT NULL, PARENTEVENTID VARCHAR(41) NULL, CLIENTHOST VARCHAR(255) NULL, MPNAME VARCHAR(64) NOT NULL, OPERATIONID INT NOT NULL, SERVICEID INT NOT NULL, ORGID INT NULL DEFAULT 0, CONTRACTID INT NOT NULL, BINDTEMPLATEID INT NOT NULL, REQUSERNAME VARCHAR(128) NULL, REQUESTDTS TIMESTAMP DEFAULT 0 NOT NULL, REQUESTMILLIS INT NOT NULL, RESPONSETIME INT NOT NULL, REQMSGSIZE INT NOT NULL, NMREQMSGSIZE INT NULL, RESPMSGSIZE INT NULL, NMRESPMSGSIZE INT NULL, ERRCATEGORY INT NULL, ERRMESSAGE VARCHAR(512) NULL, ERRDETAILS VARCHAR(1024) NULL, CREATEDTS TIMESTAMP DEFAULT 0 NOT NULL, CREATEDMILLIS INT NOT NULL, NEXTHOPURL VARCHAR(4000) NULL, ISSOAPFLTBYMP INT NOT NULL, ISSOAPFLTBYNEXTHOP INT NOT NULL, LISTENERURL VARCHAR(4000) NULL, NEXTHOPRESPTIME INT NULL, APPUSERNAME VARCHAR(128) NULL, OTHERUSERNAMES VARCHAR(512) NULL, CUSTOMFIELD1 VARCHAR(256) NULL, VERB VARCHAR(8) NULL, STATUS_CODE VARCHAR(8) NULL, CLIENTID number(38,0) DEFAULT 0 NOT NULL, CONSTRAINT MO_USAGEDATA_PK primary key (USAGEDATAID) ) ROW_FORMAT=DYNAMIC Engine=InnoDB; CREATE INDEX MO_USAGEDATA_PK1 ON MO_USAGEDATA_NEW(REQUESTDTS DESC,REQUESTMILLIS DESC); CREATE INDEX MO_USAGEDATA_PK2 ON MO_USAGEDATA_NEW(OPERATIONID); CREATE INDEX MO_USAGEDATA_PK3 ON MO_USAGEDATA_NEW(CONTRACTID); CREATE INDEX MO_USAGEDARA_IX1 ON MO_USAGEDATA_NEW(SERVICEID); CREATE TABLE MO_USAGE_NEXTHOP_NEW ( NEXTHOPID BIGINT AUTO_INCREMENT NOT NULL, EVENTID VARCHAR(41) NOT NULL, URL VARCHAR(4000) NULL, REQUESTDTS TIMESTAMP DEFAULT 0 NOT NULL, CREATEDTS TIMESTAMP DEFAULT 0 NOT NULL, RESPTIME BIGINT NULL, CONSTRAINT MO_USG_NEXTHOP_PK primary key (NEXTHOPID) ) ROW_FORMAT=DYNAMIC Engine=InnoDB;

Note: Table definitions might change based on product version. You should check to make sure that the definition above matches your table structure and alter it as necessary.

Step 2: Switch the new tables with the old tables (MySQL only)

Rename the existing live tables to *_BCK and replace them with the new, empty tables:

RENAME TABLE MO_USAGEMSGS TO MO_USAGEMSGS_BCK, MO_USAGEMSGS_NEW TO MO_USAGEMSGS; RENAME TABLE MO_USAGEDATA TO MO_USAGEDATA_BCK, MO_USAGEDATA_NEW TO MO_USAGEDATA; RENAME TABLE MO_USAGE_NEXTHOP TO MO_USAGE_NEXTHOP_BCK, MO_USAGE_NEXTHOP_NEW TO MO_USAGE_NEXTHOP;

Step 3: Drop existing foreign keys and add new indexes (MySQL only)

Alter the tables as shown in the previous section, but this time against the *_BCK tables.

Note: This might take several hours.

ALTER TABLE MO_USAGE_NEXTHOP_BCK DROP FOREIGN KEY FK_NXTHOP_EVENTID, DROP PRIMARY KEY, ADD PRIMARY KEY (NEXTHOPID, REQUESTDTS); ALTER TABLE MO_USAGEMSGS_BCK DROP PRIMARY KEY, ADD PRIMARY KEY (EVENTID,SEQ,MSGCAPTUREDDTS), DROP FOREIGN KEY FK_USG_EVENTID; ALTER TABLE MO_USAGEDATA_BCK DROP PRIMARY KEY, ADD PRIMARY KEY (USAGEDATAID, REQUESTDTS), DROP INDEX UI_USG_EVENTID, ADD INDEX NUI_USG_EVENTID(EVENTID), ADD INDEX NUI_USG_USAGEDATAID(USAGEDATAID);

Step 4: Create partitions (MySQL only)

You then create partitions as shown in the previous section, this time against the *_BCK tables. The dates and intervals would be changed to suit your system.

Note: This might take several hours.

ALTER TABLE MO_USAGE_NEXTHOP_BCK

PARTITION BY RANGE (TO_DAYS(REQUESTDTS)) (

PARTITION p0000 VALUES LESS THAN (TO_DAYS('2015-01-04 00:00:00')),

PARTITION p0111 VALUES LESS THAN (TO_DAYS('2015-01-11 00:00:00')),

PARTITION p0118 VALUES LESS THAN (TO_DAYS('2015-01-18 00:00:00')),

PARTITION p0125 VALUES LESS THAN (TO_DAYS('2015-01-25 00:00:00')),

PARTITION p0201 VALUES LESS THAN (TO_DAYS('2015-02-01 00:00:00')),

PARTITION p0208 VALUES LESS THAN (TO_DAYS('2015-02-08 00:00:00')),

PARTITION p0215 VALUES LESS THAN (TO_DAYS('2015-02-15 00:00:00')),

PARTITION p0222 VALUES LESS THAN (TO_DAYS('2015-02-22 00:00:00')),

PARTITION future VALUES LESS THAN MAXVALUE

);

ALTER TABLE MO_USAGEMSGS_BCK

PARTITION BY RANGE (TO_DAYS(MSGCAPTUREDDTS)) (

PARTITION p0000 VALUES LESS THAN (TO_DAYS('2015-01-04 00:00:00')),

PARTITION p0111 VALUES LESS THAN (TO_DAYS('2015-01-11 00:00:00')),

PARTITION p0118 VALUES LESS THAN (TO_DAYS('2015-01-18 00:00:00')),

PARTITION p0125 VALUES LESS THAN (TO_DAYS('2015-01-25 00:00:00')),

PARTITION p0201 VALUES LESS THAN (TO_DAYS('2015-02-01 00:00:00')),

PARTITION p0208 VALUES LESS THAN (TO_DAYS('2015-02-08 00:00:00')),

PARTITION p0215 VALUES LESS THAN (TO_DAYS('2015-02-15 00:00:00')),

PARTITION p0222 VALUES LESS THAN (TO_DAYS('2015-02-22 00:00:00')),

PARTITION future VALUES LESS THAN MAXVALUE

);

ALTER TABLE MO_USAGEDATA_BCK

PARTITION BY RANGE (TO_DAYS(REQUESTDTS)) (

PARTITION p0000 VALUES LESS THAN (TO_DAYS('2015-01-04 00:00:00')),

PARTITION p0111 VALUES LESS THAN (TO_DAYS('2015-01-11 00:00:00')),

PARTITION p0118 VALUES LESS THAN (TO_DAYS('2015-01-18 00:00:00')),

PARTITION p0125 VALUES LESS THAN (TO_DAYS('2015-01-25 00:00:00')),

PARTITION p0201 VALUES LESS THAN (TO_DAYS('2015-02-01 00:00:00')),

PARTITION p0208 VALUES LESS THAN (TO_DAYS('2015-02-08 00:00:00')),

PARTITION p0215 VALUES LESS THAN (TO_DAYS('2015-02-15 00:00:00')),

PARTITION p0222 VALUES LESS THAN (TO_DAYS('2015-02-22 00:00:00')),

PARTITION future VALUES LESS THAN MAXVALUE

);

Step 5: Switch the partitioned tables back (MySQL only)

Now switch the partitioned tables (*_BCK) with the new tables you created in Step 1. This will return the original data tables to live.

RENAME TABLE MO_USAGEDATA TO MO_USAGEDATA2, MO_USAGEDATA_BCK TO MO_USAGEDATA; RENAME TABLE MO_USAGEMSGS TO MO_USAGEMSGS2, MO_USAGEMSGS_BCK TO MO_USAGEMSGS; RENAME TABLE MO_USAGE_NEXTHOP TO MO_USAGE_NEXTHOP2, MO_USAGE_NEXTHOP_BCK TO MO_USAGE_NEXTHOP;

Step 6: Merge data (MySQL only)

Due to the fact that you created a set of new tables to temporarily store live data while the partitioning was done, you need to merge the data from those tables back into the live tables so that it is not lost.

INSERT INTO MO_USAGE_NEXTHOP (EVENTID, URL, REQUESTDTS, CREATEDTS, RESPTIME) SELECT EVENTID, URL, REQUESTDTS, CREATEDTS, RESPTIME FROM MO_USAGE_NEXTHOP_BCK where REQUESTDTS between X and Y; INSERT INTO MO_USAGEDATA (EVENTID PARENTEVENTID CLIENTHOST MPNAME OPERATIONID SERVICEID ORGID CONTRACTID BINDTEMPLATEID REQUSERNAME REQUESTDTS REQUESTMILLIS RESPONSETIME REQMSGSIZE NMREQMSGSIZE RESPMSGSIZE NMRESPMSGSIZE ERRCATEGORY ERRMESSAGE ERRDETAILS CREATEDTS CREATEDMILLIS NEXTHOPURL ISSOAPFLTBYMP ISSOAPFLTBYNEXTHOP LISTENERURL NEXTHOPRESPTIME APPUSERNAME OTHERUSERNAMES CUSTOMFIELD1 VERB STATUS_CODE CLIENTID) SELECT EVENTID PARENTEVENTID CLIENTHOST MPNAME OPERATIONID SERVICEID ORGID CONTRACTID BINDTEMPLATEID REQUSERNAME REQUESTDTS REQUESTMILLIS RESPONSETIME REQMSGSIZE NMREQMSGSIZE RESPMSGSIZE NMRESPMSGSIZE ERRCATEGORY ERRMESSAGE ERRDETAILS CREATEDTS CREATEDMILLIS NEXTHOPURL ISSOAPFLTBYMP ISSOAPFLTBYNEXTHOP LISTENERURL NEXTHOPRESPTIME APPUSERNAME OTHERUSERNAMES CUSTOMFIELD1 VERB STATUS_CODE CLIENTID FROM MO_USAGEDATA_BCK where REQUESTDTS between X and Y; INSERT INTO MO_USAGEMSGS SELECT * FROM MO_USAGEMSGS_BCK where MSGCAPTUREDDTS between X and Y;

Note: Table definitions might change based on product version. You should check to make sure that the definition above matches your table structure and alter it as necessary.

These scripts would be called several times with different, incremental values of X and Y where X and Y represents a small time interval such as 6 hours. This keeps the merging of data discrete and less error-prone.

You also might want to call this from a shell script to automate the process—see the sample script merge.sh in sample_scripts.zip.

Dropping partitions

The benefit of partitioning your data is that you can delete old data by simply dropping a partition. This section describes how to drop partitions for:

MySQL

For MySQL, you will need to create new partitions and drop old partitions each week. For sample scripts on MySQL, download the following zip archive: sample_scripts.zip.

Once satisfied with the scripts, you can set up a cron job to execute it each night. For example, you could configure cron to execute on Sunday morning at 1am as follows:

0 1 * * 0 /xxx/bin/partition.sh

Oracle

For Oracle, the tables will automatically create new partitions each week.

For sample procedures to drop the partitions, download the following zip archive: sample_scripts.zip

MongoDB maintenance

OAuth grants and tokens are cleaned up by the scheduled jobs, which are enabled by default. No maintenance is needed for them, unless you choose to disable them and run external database maintenance instead (see, OAuth grants and tokens). All other Mongo collections depend on the TTL indexes.

This section includes information, suggestions, and/or examples for cleaning up the following:

OAuth grants and tokens

OAuth grants and tokens are cleaned up by the scheduled Quartz jobs, which are enabled by default, so no external maintenance is necessary. The jobs are:

- ServiceManager:cleangrants.job

- ServiceManager:cleantokens.oauth.job

These jobs clean up both MongoDB and RDBMS, depending on the value for OAuth in this setting:

Akana Administration Console > com.soa.oauth.provider.server > com.soa.oauth.provider.server.config.datasource

-

Additionally, you can enable the following features for scheduled jobs on MongoDB and RDBMS to remove expired OAuth grants and tokens.

Features for Scheduled Jobs Container using MongoDB:

"com.soa.feature.subsystems", "com.soa.atmosphere.feature", "com.akana.oauth.plugin", "com.soa.oauth.feature", "com.soa.persistence.plugin"

Features for Scheduled Jobs Container using RDBMS:

"com.soa.feature.subsystems", "com.soa.atmosphere.feature", "com.akana.oauth.plugin", "com.soa.oauth.feature",

Cleaning up OAuth grants and tokens externally

If you choose, you can disable the scheduled jobs from the Akana Administration Console and run one or more scripts directly on the RDBMS from outside, in place of the built-in scheduled jobs to clean up ROLLUPs etc. An example is shown below. The script below is run against the MySQL database via shell scripts via cronjobs.

Note: This is just an example. This script is not part of the product and is not supported.

$ cat saas_db_cleanup.sh

DB_HOST="somemysql.host.com"

cur_time='mysql -u somemysqluser -h $DB_HOST -D open -NB -e "select TIMESTAMPADD(MONTH, -1, now())"'

max_id='mysql -u somemysqluser -h $DB_HOST -D open -NB -e "select min(MAX_ID) from MO_STATUS"'

rows='mysql -u somemysqluser -h $DB_HOST -D open -NB -e "select count(*) from MO_ROLLUPDATA where ROLLUPDATAID < $max_id and INTVLSTARTDTS < '${cur_time}'"'

while [ $rows -gt 0 ]

do

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLLUPDATA where ROLLUPDATAID < $max_id and INTVLSTARTDTS < '${cur_time}' LIMIT 1000000"

rows='mysql -u somemysqluser -h $DB_HOST -D open -NB -e "select count(*) from MO_ROLLUPDATA where ROLLUPDATAID < $max_id and INTVLSTARTDTS < '${cur_time}'"'

done

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLLUP15 where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLL_ORG15 where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLLUP_HOUR where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -3, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLL_ORG_H where INTVLSTARTDTS < TIMESTAMPADD(MONTH, -3, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLLUP_DAY where INTVLSTARTDTS < TIMESTAMPADD(YEAR, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from MO_ROLL_ORG_D where INTVLSTARTDTS < TIMESTAMPADD(YEAR, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete s from AM_ALERTS a inner join AM_ALERTS_SLAS s on s.ALERTSID = a.ALERTSID where a.SOURCEDTS < TIMESTAMPADD(MONTH, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from AM_ALERTS where SOURCEDTS < TIMESTAMPADD(MONTH, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from AM_AUDITTRAIL where CREATEDTS < TIMESTAMPADD(YEAR, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from PM_AUDITTRAIL where DTSCREATE < TIMESTAMPADD(YEAR, -1, now());"

date

mysql -u somemysqluser -h $DB_HOST -D open -e "delete from API_CONTRACT_SLA_DATA where INTVLSTARTDTS < TIMESTAMPADD(YEAR, -1, now());"

date

Policy Manager database

The example below creates a TTL index in MongoDB for the Policy Manager database.

db.ALERTS.createIndex({ "generatedAt": 1 },{ expireAfterSeconds: 31536000, "name" : "ALERTS_Clnp", background: true})

db.ALERT_AUDIT_TRAIL.createIndex({ "createDate": 1 },{ expireAfterSeconds: 31536000, "name" : "ALERT_AUDIT_TRAIL_Clnp", background: true})

db.SECURITY_AUDIT_TRAIL.createIndex({ "createDate": 1 },{ expireAfterSeconds: 31536000, "name" : "SECURITY_AUDIT_TRAIL_Clnp", background: true})

PM_AUDIT database

You can set up the Audit logs to have a TTL index that controls how long the data is kept. The example below sets the logs to expire after 30 days.

{

"v" : 1,

"key" :

{ "requestDtsCal" : 1 }

,

"name" : "AUDIT_Clnp",

"ns" : "PM_AUDIT.AUDIT",

"expireAfterSeconds" : 2592000,

"background" : true

}

The example below creates a TTL index in MongoDB for the PM_AUDIT database.

db.AUDIT.createIndex({ "requestDtsCal": 1 },{ expireAfterSeconds: 4838400, "name" : "AUDIT_Clnp", background: true })

db.TRANSACTION_METRIC.createIndex({ "createdDate": 1 },{ expireAfterSeconds: 4838400, "name" : "TRANSACTION_METRIC", background: true })

METRIC_ROLLUP_DATA

The example below creates a TTL index on METRIC_ROLLUP_DATA.

Note: You can also configure purge intervals for operational metric rollup data cleanup, which is more efficient. See, MongoDB: metric rollup data cleanup below.

db.OPERATIONAL_METRIC.createIndex({ "value.timestamp": 1 },{ expireAfterSeconds: 31536000, "name" : "OPERATIONAL_METRIC_Clnp", background: true })

Recommended MongoDB indexes

If you are using the Tenant Org metrics, use the search index below.

Note: If you already have search indexes in place, check against your current indexes before creating the below so you don't end up with duplicates.

use METRIC_ROLLUP_DATA

db.OPERATIONAL_METRIC.createIndex(

{

"value._rolluptype" : 1,

"value.hierarchy.organization.id" : 1,

"value.timestamp" : 1 },

{ name: "OPERATIONAL_METRIC_ORG_8AIdx", background : true}

)

rs1:PRIMARY> var exp = db.OPERATIONAL_METRIC.explain("executionStats")

rs1:PRIMARY> exp.find({ "$and" : [ { "value._rolluptype": "DAYS" }, { "value._timezones": "GMT" }, { "value.hierarchy.organization.id": 16764 }, { "value.timestamp": { $gte: new Date(1548979200000) }} ]})

{

"queryPlanner" : {

"plannerVersion" : 1,

"namespace" : "METRIC_ROLLUP_DATA.OPERATIONAL_METRIC",

"indexFilterSet" : false,

"parsedQuery" : {

"$and" : [

{

"value._rolluptype" : {

"$eq" : "DAYS"

}

},

{

"value._timezones" : {

"$eq" : "GMT"

}

},

{

"value.hierarchy.organization.id" : {

"$eq" : 16764

}

},

{

"value.timestamp" : {

"$gte" : ISODate("2019-02-01T00:00:00Z")

}

}

]

},

"winningPlan" : {

"stage" : "FETCH",

"filter" : {

"value._timezones" : {

"$eq" : "GMT"

}

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"value._rolluptype" : 1,

"value.hierarchy.organization.id" : 1,

"value.timestamp" : 1

},

"indexName" : "OPERATIONAL_METRIC_ORG_8AIdx",

"isMultiKey" : true,

"multiKeyPaths" : {

"value._rolluptype" : [ ],

"value.hierarchy.organization.id" : [

"value.hierarchy"

],

"value.timestamp" : [ ]

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 2,

"direction" : "forward",

"indexBounds" : {

"value._rolluptype" : [

"[\"DAYS\", \"DAYS\"]"

],

"value.hierarchy.organization.id" : [

"[16764.0, 16764.0]"

],

"value.timestamp" : [

"[new Date(1548979200000), new Date(9223372036854775807)]"

]

}

}

},

"rejectedPlans" : [

{

"stage" : "FETCH",

"filter" : {

"$and" : [

{

"value._timezones" : {

"$eq" : "GMT"

}

},

{

"value.hierarchy.organization.id" : {

"$eq" : 16764

}

}

]

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"value._rolluptype" : 1,

"value.contract.id" : 1,

"value.timestamp" : 1

},

"indexName" : "OPERATIONAL_METRIC_RLP_6AIdx",

"isMultiKey" : false,

"multiKeyPaths" : {

"value._rolluptype" : [ ],

"value.contract.id" : [ ],

"value.timestamp" : [ ]

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 2,

"direction" : "forward",

"indexBounds" : {

"value._rolluptype" : [

"[\"DAYS\", \"DAYS\"]"

],

"value.contract.id" : [

"[MinKey, MaxKey]"

],

"value.timestamp" : [

"[new Date(1548979200000), new Date(9223372036854775807)]"

]

}

}

},

{

"stage" : "FETCH",

"filter" : {

"value._timezones" : {

"$eq" : "GMT"

}

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"value._rolluptype" : 1,

"value.hierarchy.organization.id" : 1,

"value.service.id" : 1,

"value.operation.id" : 1,

"value.timestamp" : 1

},

"indexName" : "OPERATIONAL_METRIC_RLP_1AIdx",

"isMultiKey" : true,

"multiKeyPaths" : {

"value._rolluptype" : [ ],

"value.hierarchy.organization.id" : [

"value.hierarchy"

],

"value.service.id" : [ ],

"value.operation.id" : [ ],

"value.timestamp" : [ ]

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 2,

"direction" : "forward",

"indexBounds" : {

"value._rolluptype" : [

"[\"DAYS\", \"DAYS\"]"

],

"value.hierarchy.organization.id" : [

"[16764.0, 16764.0]"

],

"value.service.id" : [

"[MinKey, MaxKey]"

],

"value.operation.id" : [

"[MinKey, MaxKey]"

],

"value.timestamp" : [

"[new Date(1548979200000), new Date(9223372036854775807)]"

]

}

}

}

]

},

"executionStats" : {

"executionSuccess" : true,

"nReturned" : 0,

"executionTimeMillis" : 1,

"totalKeysExamined" : 0,

"totalDocsExamined" : 0,

"executionStages" : {

"stage" : "FETCH",

"filter" : {

"value._timezones" : {

"$eq" : "GMT"

}

},

"nReturned" : 0,

"executionTimeMillisEstimate" : 0,

"works" : 2,

"advanced" : 0,

"needTime" : 0,

"needYield" : 0,

"saveState" : 0,

"restoreState" : 0,

"isEOF" : 1,

"invalidates" : 0,

"docsExamined" : 0,

"alreadyHasObj" : 0,

"inputStage" : {

"stage" : "IXSCAN",

"nReturned" : 0,

"executionTimeMillisEstimate" : 0,

"works" : 1,

"advanced" : 0,

"needTime" : 0,

"needYield" : 0,

"saveState" : 0,

"restoreState" : 0,

"isEOF" : 1,

"invalidates" : 0,

"keyPattern" : {

"value._rolluptype" : 1,

"value.hierarchy.organization.id" : 1,

"value.timestamp" : 1

},

"indexName" : "OPERATIONAL_METRIC_ORG_8AIdx",

"isMultiKey" : true,

"multiKeyPaths" : {

"value._rolluptype" : [ ],

"value.hierarchy.organization.id" : [

"value.hierarchy"

],

"value.timestamp" : [ ]

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 2,

"direction" : "forward",

"indexBounds" : {

"value._rolluptype" : [

"[\"DAYS\", \"DAYS\"]"

],

"value.hierarchy.organization.id" : [

"[16764.0, 16764.0]"

],

"value.timestamp" : [

"[new Date(1548979200000), new Date(9223372036854775807)]"

]

},

"keysExamined" : 0,

"seeks" : 1,

"dupsTested" : 0,

"dupsDropped" : 0,

"seenInvalidated" : 0

}

}

},

"serverInfo" : {

"host" : "MMOHMAD10L3",

"port" : 27020,

"version" : "3.6.9",

"gitVersion" : "167861a164723168adfaaa866f310cb94010428f"

},

"ok" : 1

}

rs1:PRIMARY>

MongoDB: metric rollup data cleanup

In some cases, purge intervals may not be defined for OPERATIONAL_METRIC rollup configuration. In this scenario, rollup data is not purged by default. Data could be purged by creating a TTL index based on the timestamp property and expiring after one year. However, a year of minute data is a lot of data. It is better to be able to configure the purge value separately for each purge interval.

You can define the purge intervals for OPERATIONAL_METRIC rollups. The default value for each purge interval is shown below.

| Purge interval | Default value |

|---|---|

| MINUTES |

1 day |

| HOURS |

1 week |

| DAYS | 1 year |

| WEEKS | 1 year |

| MONTHS | 1 year |

| YEARS | 1 year |

An example of a query to purge operational metric rollup data is shown below. You can use this query to update the rollup configuration to use the default values for each purge interval. The below query updates the existing rollups with the _deleteOn timestamp value for each purge interval.

purgeInterval is an array field inside the rollup configuration collection for OPERATIONAL_METRIC. In the product, it could be empty. If it is empty, the product doesn't mark the metric rollup data with the proper _deleteOn flag. Once the purge intervals are defined, as per the below, the product will automatically start marking the data, and the TTL will take care of purging the documents with the timestamp and the _deleteOn flag.

use Akana

db.ROLLUP_CONFIGURATION.updateOne({name: "OPERATIONAL_METRIC"},

{"$set": {"purgeInterval": {

MINUTES: {

type: "DAYS",

interval: 1

},

HOURS: {

type: "WEEK",

interval: 1

},

DAYS: {

type: "YEAR",

interval: 1

},

WEEK: {

type: "YEAR",

interval: 1

},

MONTH: {

type: "YEAR",

interval: 1

},

YEAR: {

type: "YEAR",

interval: 1

}

}}});

How to add entries to the crontab to create the cron job

Use the following command to edit the cron jobs:

crontab -e

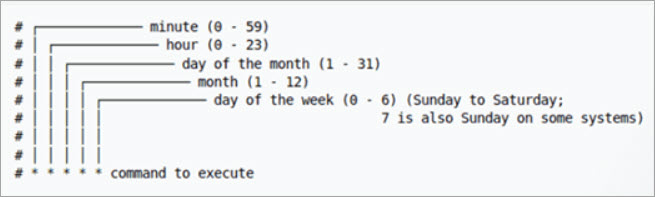

If there are already existing cron jobs present, it will open the existing crontab file. If there are no cron jobs already configured on the system, a new crontab file will be created. The following diagram illustrates the crontab entry syntax:

To add a new cron job, you need to use the crontab entry syntax to specify how often the system must execute the cron job as well as the command for the system to execute it.

How to add an email address within a cron job entry

You can specify an email address within a cron job entry in the crontab file to receive an email log each time the cron job executes. There is an option to email the log of a cron job after it executes. This log can be emailed to one or multiple users.

The following example shows the crontab entry including log email to one email address:

MAILTO=”emailaddress@example.com” 0 1 * * * /full/path/to/script.sh

The following example shows the crontab entry including log email to multiple email addresses:

MAILTO=”emailaddress1@example.com,emailaddress2@example.com” 0 1 * * * /full/path/to/script.sh

How to configure the wait_timeout and max_idle_time value

Problem: PM containers becoming slow or unresponsive, login issues with PM or admin console etc.

Cause: Available database connections from the connection pool get exhausted when inactive database connections are not cleared. Thus new database connection requests do not get resolved.

Resolution: The setting of wait_timeout, interactive_timeout, and max_idle_time parameters helps remove the inactive connections after the specified timeout intervals and supports requests for new connections.

Changing MySQL wait_timeout value

The wait_timeout is the time the server waits for an activity on a non-interactive or idle connection before closing it. Sleeping idle connections will use high server resources and result in a high server load. The interactive_timeout is the time a server waits for activity on an interactive connection before closing it. You can set the wait_timeout value for a session or globally. For a session, if you set the wait_timeout value then it will be valid for a particular session. However, if you set the wait_timeout value globally, it will be valid for all the sessions.

You must have server access to change the MySQL wait_timeout and interactive_timeout values. Take the following steps:

-

Check the existing timeout value by using the following command.

SELECT @@global.wait_timeout, @@session.wait_timeout,@@global.interactive_timeout, @@session.interactive_timeout;

-

Set session and global wait_timeout and interactive_timeout values as 300 (5 mins).

SET session wait_timeout=300; SET @@GLOBAL.wait_timeout=300; SET session interactive_timeout=300; SET @@GLOBAL.interactive_timeout=300;

If you need to change the wait_timeout global value then take the following steps:

-

Open my.cnf/mysqld.cnf file from path /etc/mysql directory.

-

Locate the timeout configuration under mysqld and add the wait_timeout and interactive_timeout values.

[mysqld] wait_timeout = 300 interactive_timeout = 300

-

Save the changes and restart the MySQL server for the changes to take effect.

Changing Oracle max_idle_time value

The max_idle_time specifies the maximum number of minutes that a session can be idle. After that point, the session is automatically terminated.

You must have server access to change the Oracle idle timeout value. Take the following steps:

-

Sign in to the Oracle database as an administrator or sysdba user.

-

Execute the following query to check the current max_idle_time, which must be 0 (no limit) by default.

show parameter max_idle_time;

-

Execute the following query to change the max_idle_time to 30 mins.

alter system set max_idle_time=30;

-

Sign out of the session and restart the Oracle database server.

-

Re-login to the Oracle database server and verify the max_idle_time is changed to 30 mins by executing the following command.

show parameter max_idle_time;

-

Execute the following query to check any inactive database connections.

SELECT sess.process, sess.status, sess.STATE, sess.username, sess.schemaname, sql.sql_text FROM v$session sess, v$sql sql WHERE sql.sql_id(+) = sess.sql_id AND sess.type = 'USER';

-

Monitor the database connections to see whether the inactive connections are removed after configuring the max_idle_time parameter by querying the process list.