Sample Deployment Scenario

Illustration and overview for a sample deployment scenario.

Introduction

There are many ways you can deploy the Akana API Platform. This document provides an illustration of a clustered environment with high availability. Your deployment might vary depending on many factors, including:

- Database connectivity from the containers

- Load balancing and failover requirements

- Security and firewall restrictions

Note: It's best to consult with a member of the Akana Professional Services team to get recommendations on the ideal deployment for your requirements.

Sample deployment: clustered environment

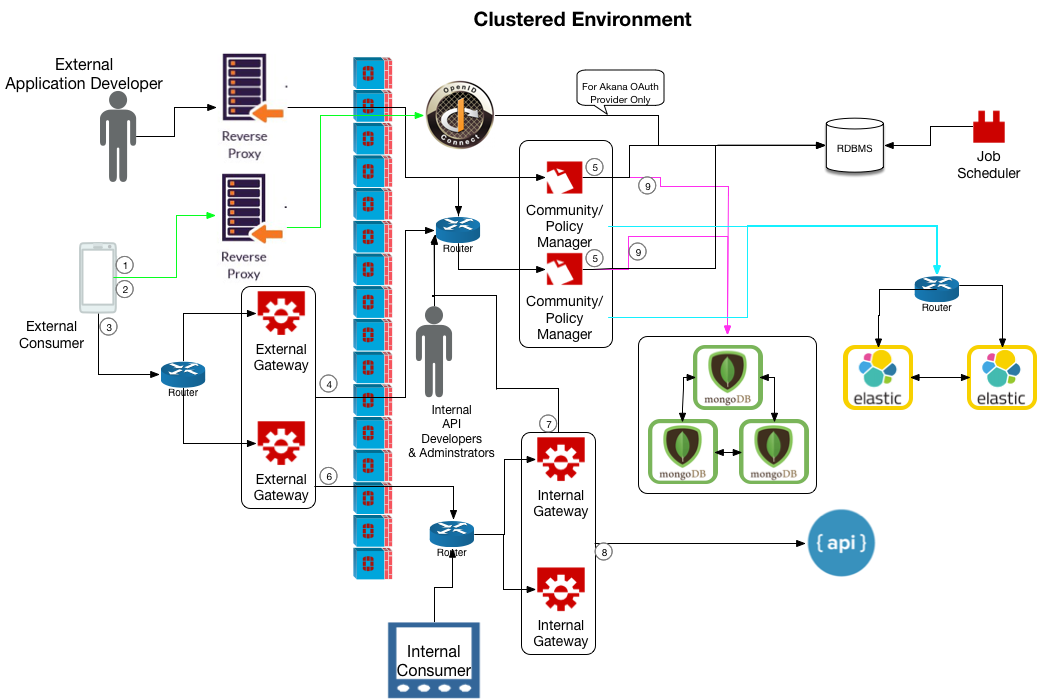

The reference diagram above illustrates an example architecture for a typical customer deployment with a single geographical data center (one physical location).

Legend

The numbers in the architectural reference diagram above correspond to the sequence of actions below:

1. The consuming app invokes the OAuth server to receive the access token. See OAuth Provider below.

2. Authentication is validated, and then the access token is returned to the consuming app.

3. The consuming app invokes an API hosted in the Akana API Gateway, accessing the external API Gateway (Network Director, ND).

4. The external API Gateway, acting as the Policy Enforcement Point, reaches out to Policy Manager (PM), which is acting as the Policy Decision Point, to authorize and authenticate the consuming application. See Policy Manager and Community Manager running in the same container and API Gateway/Internal Gateway (Network Director) below (#4 and #7 show the same activity).

5. The containers with Policy Manager/Community Manager installed send and/or receive data to/from the RDBMS.

6. The external API Gateway calls the internal API Gateway.

7. The internal API Gateway, acting as the Policy Enforcement Point, reaches out to Policy Manager (PM), which is acting as the Policy Decision Point, to authorize and authenticate the consuming application. See Policy Manager and Community Manager running in the same container and API Gateway/Internal Gateway (Network Director) below (#4 and #7 show the same activity).

8. The internal API Gateway invokes the downstream API (the physical service).

9. The internal API Gateway saves analytic information about the request/response to MongoDB.

10. Policy Manager stores the analytic information in MongoDB. See MongoDB below.

The response is returned to the consuming application.
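From the consuming app's point of view, only the first three actions in the sequence above are visible: request a token, receive it, and call the API on the external gateway with that token. The following is a minimal sketch of those two outbound requests, not the platform's own client code. It assumes the OAuth 2.0 client credentials grant and bearer token usage; the host names, paths, and function names are hypothetical placeholders. The requests are only constructed, not sent.

```python
import base64
import urllib.parse
import urllib.request

# Hypothetical addresses: substitute the reverse proxy (OAuth) and
# external gateway addresses used in your own deployment.
TOKEN_URL = "https://oauth.example.com/oauth/token"
API_URL = "https://gateway.example.com/orders/v1/items"


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Steps 1-2: ask the OAuth server for an access token.

    Assumes the client credentials grant with HTTP Basic client authentication.
    """
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("utf-8")
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def build_api_request(access_token: str) -> urllib.request.Request:
    """Step 3: call the API on the external gateway, presenting the bearer token."""
    return urllib.request.Request(
        API_URL,
        method="GET",
        headers={"Authorization": f"Bearer {access_token}"},
    )
```

Everything from step 4 onward (policy decisions, the hop to the internal gateway, analytics) happens inside the deployment and needs no extra work from the consuming app.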

Notes:

- For this scenario to work well, the database and the PM/CM containers must be in the same physical location.

- The above diagram includes an external consumer, accessing the deployment from outside the firewall, and an internal consumer such as a company employee.

Features

The example deployment above includes the following:

- Policy Manager and Community Manager running in the same container

- Job Scheduler

- OAuth Provider

- Reverse Proxies

- API Gateway/Internal Gateway (Network Director)

- External App Developer

- Elasticsearch

- MongoDB

Policy Manager and Community Manager running in the same container

This sample clustered deployment environment includes two containers that each have Policy Manager and Community Manager installed on them. Each container should be on a different machine.

Two containers are the minimum for a clustered environment, providing some redundancy.

For information on the features you'll need to install on the combined Community Manager/Policy Manager containers in this sample deployment scenario, see Community Manager/Policy Manager container features with access to MongoDB.

Job Scheduler

This sample clustered deployment environment includes a dedicated container for the background job scheduler (Quartz scheduler). This container can run all of the Policy Manager and Community Manager scheduled jobs.

The job scheduler cannot be clustered; it runs on only one container.

It's not necessary to have the job scheduler in its own container, completely separate from other features. However, by separating it out, you can help ensure that the scheduled jobs, which can include a lot of data processing, can run without affecting other containers.

Whether or not the container that's running the job scheduler is on a separate machine, we recommend allocating plenty of memory for the scheduled jobs. For example, 2 GB of memory and 2 CPUs should be sufficient.

For information on the features you'll need to install on this standalone container, to add jobs to the Quartz scheduler, see Scheduled Jobs container features.

Note: There are other scheduled jobs that are run on each container. For example, Network Director has a job scheduler that is responsible for reaching out to Policy Manager to get updated information on which APIs to load. These scheduled jobs are not separate installation features; they are built in to each container.

OAuth Provider

OAuth provides the framework for authentication and authorization, whether you use the Akana API Platform's OAuth Provider or an external OAuth Provider.

Note: If you're using PingFederate or the platform's inbuilt OAuth Provider, you can use referenced bearer tokens or JWT bearer access tokens. All other OAuth Providers require JWT.

If the platform's inbuilt OAuth Provider is used, it connects directly to the database.

For information on the features you'll need to install on the standalone OAuth container, or the container where you want the OAuth feature to reside, see Standalone OAuth container features.

Reverse Proxies

The reverse proxies provide a way for users to access the containers that are behind the firewall. In this example, the reverse proxies perform two functions:

- Allowing apps and external consumers external access into the CM containers.

- Allowing apps and external consumers to reverse proxy into the OAuth provider.

The platform doesn't provide reverse proxy functionality. You can use a third-party product such as HAProxy, F5, or NGINX.

API Gateway/Internal Gateway (Network Director)

This sample clustered deployment environment includes four Network Director containers:

- External Gateways: an external cluster of two, outside the firewall

- Internal Gateways: an internal cluster of two, inside the firewall

In this sample scenario, the external Network Director instances call the internal Network Director instances before calling the API. It's not necessary to configure your deployment this way, but it's a clean approach and avoids opening direct access to your internal network from your DMZ.

The Network Director communicates with Policy Manager and relays the messages downstream to the API.

For information on the features you'll need to install on Network Director containers, see Standalone API Gateway (Network Director).

External App Developer

The external app developer accesses the Community Manager developer portal via the reverse proxy. Community Manager offers design-time features to allow the app developer to define and manage apps and request access to APIs.

Elasticsearch

This sample clustered deployment environment includes two Elasticsearch nodes, the minimum for redundancy.

The two Elasticsearch nodes should be on separate machines, for failsafe purposes; however, they needn't be dedicated machines. For example, you could run Elasticsearch along with each of your Community Manager/Policy Manager containers.

Install Elasticsearch as described in Installing and Configuring Elasticsearch.

MongoDB

This sample clustered deployment environment includes three MongoDB instances, the minimum for sharding. It's a good idea to do sharding if you have multiple data centers. For more information, see API Gateway Multi-Regional Deployment.

To run MongoDB, you'll need to install:

- Akana MongoDB Support (Plug-In). Must be installed on the Policy Manager and Community Manager containers.

Note: In addition, if you're using MongoDB, the Network Director should be configured to use a remote writer. In the Akana Administration Console, Configuration > com.soa.monitor.usage:

- usage.local.writer.enabled is true by default. Set it to false.

- usage.remote.writer.enabled is false by default. Set it to true.
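As a quick check on the two settings above, the sketch below compares a container's effective values against what a MongoDB-backed deployment expects. The property names and defaults are taken from this section; the function name and the idea of passing the settings in as a plain dictionary are assumptions for illustration, since how you read the values out of the Akana Administration Console is up to you.

```python
from typing import Dict, List

# Defaults for the com.soa.monitor.usage category, as stated above.
DEFAULTS: Dict[str, str] = {
    "usage.local.writer.enabled": "true",
    "usage.remote.writer.enabled": "false",
}

# Values the Network Director should have when MongoDB is used.
REQUIRED_FOR_MONGODB: Dict[str, str] = {
    "usage.local.writer.enabled": "false",
    "usage.remote.writer.enabled": "true",
}


def find_writer_misconfigurations(settings: Dict[str, str]) -> List[str]:
    """Return one message per property whose effective value is wrong.

    A property missing from `settings` is treated as still having its default.
    """
    problems = []
    for name, expected in REQUIRED_FOR_MONGODB.items():
        actual = settings.get(name, DEFAULTS[name]).strip().lower()
        if actual != expected:
            problems.append(f"{name} is {actual}; set it to {expected}")
    return problems
```

An untouched Network Director container reports both properties, because both defaults are the opposite of what the remote writer setup needs.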

Container features for this sample deployment

To create the above deployment, you'd need to install the features below in the various containers.

- Community Manager/Policy Manager container features with access to MongoDB

- Standalone API Gateway (Network Director)

- Standalone OAuth container features

- Scheduled Jobs container features

Community Manager/Policy Manager container features with access to MongoDB

In each CM/PM combo container shown in this sample deployment, install the following features:

- Akana Policy Manager Console

- Akana Policy Manager Services: this feature bundle includes the Akana Scheduled Jobs feature, which adds jobs to the Quartz scheduler. It also includes Akana Security Services. This feature is needed on all containers except Network Director.

- Akana Community Manager

- Akana Community Manager Scheduled Jobs

Install the following plug-ins:

- Akana MongoDB Support (Plug-In) (if using MongoDB for analytics)

- Akana Community Manager Policy Console

- Akana OAuth Plug-In (must be installed on the Community Manager container if OAuth is installed on a separate container)

- One or more of the following (at least one theme is required for Community Manager):

  - Akana Community Manager Default Theme

  - Akana Community Manager Hermosa Theme

  - Akana Community Manager Simple Developer Theme

Note: The Simple Developer theme is deprecated in version 2020.2.0, and will be removed in a future release.

Standalone API Gateway (Network Director)

Install the following feature on each container running Network Director:

- Akana Network Director

Install the following plug-in:

- Akana API Platform ND Extensions Feature (needed for API analytics and Akana API Platform OAuth and security policies)

Standalone OAuth container features

If you are using the Akana API Platform as your OAuth Provider, install the following features on the standalone OAuth container, or the container where you want the OAuth feature to reside. These are not required if you're using an external OAuth provider.

- Akana OAuth Provider

- Akana Community Manager OAuth Provider

Install the following plug-in:

- Akana API Platform ND Extensions Feature (needed for API analytics and Akana API Platform OAuth and security policies)

Note: If you install OAuth on a separate container from Community Manager, make sure the Akana OAuth Plug-In is installed on the Community Manager container. This is needed so that you can configure OAuth via the Community Manager developer portal.

Scheduled Jobs container features

If you want to run the scheduled jobs features, which add jobs to the Quartz scheduler, in a standalone container, install these features:

- Akana Policy Manager Services: this feature bundle includes the Akana Scheduled Jobs feature, which adds jobs to the Quartz scheduler. It also includes Akana Security Services. This feature is needed on all containers except Network Director.

- Akana Community Manager Scheduled Jobs

Install the following plug-in on the Scheduled Jobs container:

- Akana OAuth Plug-In (required on this container if the Community Manager Scheduled Jobs feature is installed on a separate container from Community Manager)

Optional: Akana OAuth Plug-In (must be installed on the Community Manager container if OAuth is installed on a separate container)

Note: There are other scheduled jobs that are run on each container. For example, Network Director has a job scheduler that is responsible for reaching out to Policy Manager to get updated information on which APIs to load. These scheduled jobs are not separate installation features; they are built in to each container.
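The feature lists above carry a few cross-container rules that are easy to miss when planning an installation. The sketch below encodes three of them as a check over a planned layout: Community Manager needs at least one theme, Community Manager needs the Akana OAuth Plug-In when OAuth lives on a separate container, and a container running Community Manager Scheduled Jobs without Community Manager also needs the plug-in. The feature names come from this document; the container names, the dictionary layout, and the function itself are hypothetical, and this is not an Akana tool.

```python
from typing import Dict, List, Set

THEMES = {
    "Akana Community Manager Default Theme",
    "Akana Community Manager Hermosa Theme",
    "Akana Community Manager Simple Developer Theme",
}
OAUTH_PLUGIN = "Akana OAuth Plug-In"


def check_layout(containers: Dict[str, Set[str]]) -> List[str]:
    """Return a message for each rule a planned container layout breaks.

    `containers` maps a container name to the features and plug-ins
    planned for it.
    """
    problems = []
    oauth_hosts = {
        name for name, features in containers.items()
        if "Akana OAuth Provider" in features
    }
    for name, features in sorted(containers.items()):
        has_cm = "Akana Community Manager" in features
        if has_cm and not features & THEMES:
            problems.append(f"{name}: no Community Manager theme installed")
        # OAuth on a separate container: Community Manager needs the plug-in.
        if has_cm and oauth_hosts and name not in oauth_hosts and OAUTH_PLUGIN not in features:
            problems.append(f"{name}: missing {OAUTH_PLUGIN} (OAuth is on a separate container)")
        # CM scheduled jobs split out from Community Manager: plug-in needed here too.
        if ("Akana Community Manager Scheduled Jobs" in features
                and not has_cm and OAUTH_PLUGIN not in features):
            problems.append(f"{name}: missing {OAUTH_PLUGIN} (CM scheduled jobs run apart from CM)")
    return problems
```

For example, a layout with a combined CM/PM container and a standalone OAuth container is flagged until the plug-in is added to the CM/PM container.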